Editor's Note: A previous version of this post ran on The New Stack.

The exponential growth of microservices is nothing short of astounding. It’s even spawned a joke that reached its peak virality only recently.

Joe Nash, a developer educator at Twilio, jested that a few influential engineers discussing a burgeoning microservice would be enough for it to quickly become a mainstay in the wider tech community. He came up with a fictional new hashing service from AWS called Infinidash to illustrate his point. Joe was right. Talk of Infinidash dominated social circles, culminating in a joke job posting from popular messaging application Signal citing Infinidash as a central component of their ecosystem.

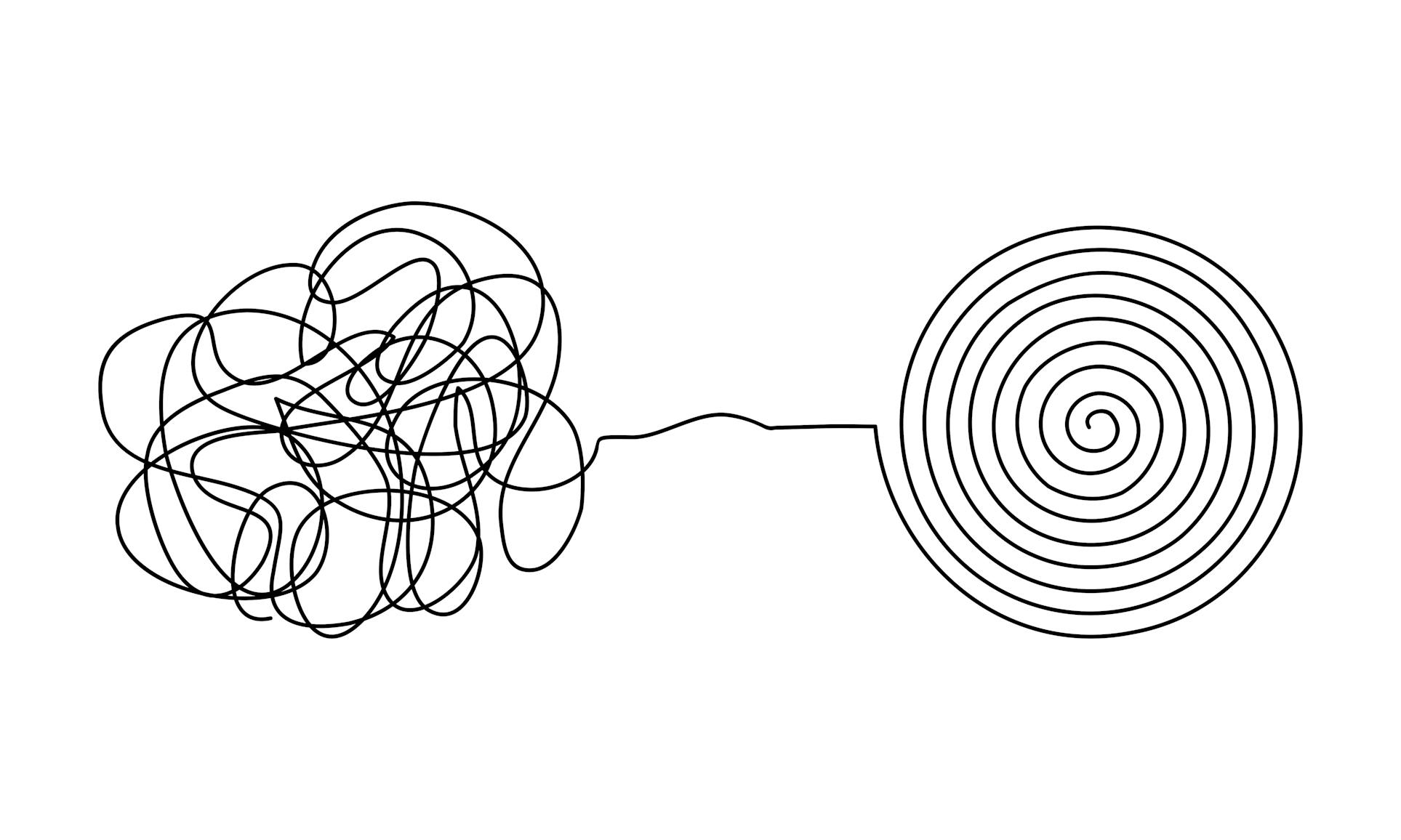

The joke around this fictitious product generated laughs and hot takes, but aside from its criticism of the fickle nature of a hype cycle, the salient point comes through loud and clear. While microservices enable rapid feature iteration for developers and faster delivery cycles for companies, they can quickly turn into an unwieldy mess that requires a massive investment of both time and money. Indeed, as microservices have grown from a niche methodology into a standard, they’ve also become more challenging to manage and govern.

The art and science of platform engineering has risen to popularity, in part, to make sense of this complex ecosystem. It’s not unthinkable that a team could be running hundreds of microservices, even more instances, and thousands of checks in any given hour. Case in point: Monzo detailed its 1,500 microservices in a now-famous blog post about its network isolation project.

Platform engineering examines the entire software development life cycle from start to finish. The outcomes of this discovery process inform the design, build, and maintenance of an abstraction and automation framework created to empower the application development life cycle. A typical workflow might include a source control system connected to a continuous integration system, which is then deployed into production.

But as more teams are outfitted with self-serve platforms, the needs of the platform can rapidly evolve. Application development teams often require differing workflows for continuous integration (CI), continuous delivery (CD) and deployment. And with this growth in complexity comes a growth in solutions. Beyond the sheer headache you’re potentially walking into, an architecture composed entirely of microservices can become costly and a source of considerable risk once you take the likelihood of misconfigurations into account. Then there is the cacophony of alerts you can find yourself faced with in the event of a mishap.

It’s All About Timing

In a distributed system, services are never fully independent, even though the architecture appears to be the opposite of a monolith. You’ve also got to solve for latency. Say you have a service reading from a database. You now also need to account for additional dependencies along with asynchronous calls. If your team hasn’t taken into account the manner and sequence in which these calls are made, you’ll quickly accumulate a backlog of notifications resembling the winning animation you see when you complete a desktop game of Solitaire.
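One way to keep a slow dependency from turning into a cascade of notifications is to give every outbound call an explicit time budget. The following is a minimal sketch, assuming Python's `asyncio`; the function names, the simulated dependencies and the timeout values are all hypothetical and only illustrate the idea.

```python
import asyncio


async def call_with_budget(name, coro, timeout_s):
    """Await one dependency call, returning (name, None) if it exceeds its budget."""
    try:
        return name, await asyncio.wait_for(coro, timeout=timeout_s)
    except asyncio.TimeoutError:
        # One bounded, named failure instead of an open-ended hang.
        return name, None


async def fake_dependency(delay_s, value):
    """Hypothetical stand-in for a database read or a downstream service call."""
    await asyncio.sleep(delay_s)
    return value


async def load_profile():
    """Call both dependencies concurrently, each with its own time budget."""
    results = await asyncio.gather(
        call_with_budget("database", fake_dependency(0.01, {"id": 1}), 0.5),
        call_with_budget("recommendations", fake_dependency(1.0, ["a"]), 0.05),
    )
    return dict(results)
```

Running `asyncio.run(load_profile())` returns the database result alongside an explicit `None` for the slow call, so the caller can decide what a missing dependency should mean instead of waiting on it.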

When working with microservices, you must think critically about monitoring strategy to create a system that your current team can support. Resist the temptation to design for the future, and focus on scalability and realistic maintainability.

Create a Meaningful Crescendo

The interdependency of your microservices-based architecture also complicates logging and makes log aggregation a vital part of a successful approach. Sarah Wells, the technical director at the Financial Times, has overseen her team’s migration of more than 150 microservices to Kubernetes. Ahead of this project, while creating an effective log aggregation system, Wells cited the need for selectively choosing metrics and named attributes that identify the event, along with all the surrounding occurrences happening as part of it. Correlating related services ensures that a system is designed to flag genuinely meaningful issues as they happen.
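To make the idea of named attributes and correlated events concrete, here is a minimal sketch in Python. It is not the Financial Times' implementation; the field names (`service`, `event`, `transaction_id`) and the helper functions are assumptions chosen for illustration.

```python
import json
import time
import uuid


def new_transaction_id() -> str:
    """Create an ID at the edge of the system and pass it to every downstream call."""
    return uuid.uuid4().hex


def log_event(service: str, event: str, transaction_id: str, **attributes) -> str:
    """Serialize one log record as JSON with a small, fixed set of named attributes."""
    record = {
        "timestamp": time.time(),
        "service": service,
        "event": event,
        "transaction_id": transaction_id,
        **attributes,
    }
    line = json.dumps(record, sort_keys=True)
    print(line)
    return line


def correlate(lines, transaction_id):
    """Return the records that belong to one transaction, in logged order."""
    records = [json.loads(line) for line in lines]
    return [r for r in records if r["transaction_id"] == transaction_id]
```

Because every service attaches the same `transaction_id`, an aggregator can pull together the surrounding events for a single request rather than leaving you to match timestamps by hand.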

In her recent talk at QCon, Wells also noted the importance of understanding rate limits when constructing your log aggregation. As she pointed out, when it comes to logs, you often don’t know if you’ve lost a record of something important until it’s too late.
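One hedge against silently losing records is to count what a rate limit drops, so the loss itself becomes visible. The sketch below assumes a simple fixed-window limiter sitting in front of a log shipper; the class name and parameters are hypothetical, and a real aggregation pipeline will have its own limits and counters.

```python
class CountingRateLimiter:
    """Fixed-window limiter that records how many log lines it had to drop."""

    def __init__(self, max_per_window: int, window_seconds: float = 1.0):
        self.max_per_window = max_per_window
        self.window_seconds = window_seconds
        self.window_start = 0.0
        self.sent_in_window = 0
        self.dropped_total = 0

    def allow(self, now: float) -> bool:
        """Return True if a record may be sent at time `now` (in seconds)."""
        # Start a fresh window once the current one has expired.
        if now - self.window_start >= self.window_seconds:
            self.window_start = now
            self.sent_in_window = 0
        if self.sent_in_window < self.max_per_window:
            self.sent_in_window += 1
            return True
        # Over the limit: drop the record, but keep count of the loss.
        self.dropped_total += 1
        return False
```

In practice `now` would come from something like `time.monotonic()`, and `dropped_total` would be exported as a metric with an alert attached to it.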

A great approach is to implement a process that turns any situation into a request. For instance, the next time your team finds itself looking for a piece of information it deems useful, don’t just fulfill the request. Log it for your team’s next process review to see whether you can expand your reporting metrics.

The Importance of Order

The same thing goes for the alerts that you do receive. Are you confident that you understand them all? This is in no way meant to be a “call out,” but rather a question designed to help you pause for thought and rethink your approach. If you don’t understand an alert, you’re likely not the only one on your team lacking clarity. Ensure that your team uses a shared vocabulary for rank of importance, and most importantly, that different areas of your infrastructure are assigned specific owners to retain clear reporting lines.
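A shared vocabulary and clear ownership can be written down as data rather than left as tribal knowledge. The following is a minimal sketch; the severity levels, component names and team names are invented for illustration and should be replaced with whatever your team agrees on.

```python
from enum import IntEnum


class Severity(IntEnum):
    """Hypothetical shared ranking; higher values are more urgent."""
    INFO = 1
    WARNING = 2
    CRITICAL = 3


# Hypothetical ownership map: every area of the infrastructure has a named owner.
OWNERS = {
    "payments-db": "team-payments",
    "edge-proxy": "team-platform",
}
DEFAULT_OWNER = "team-platform"


def route_alert(component: str, severity: Severity) -> dict:
    """Attach an owner and a paging decision to an alert."""
    return {
        "component": component,
        "severity": severity.name,
        "owner": OWNERS.get(component, DEFAULT_OWNER),
        # Only the highest rank wakes someone up.
        "page": severity >= Severity.CRITICAL,
    }
```

An alert for a component missing from `OWNERS` falls back to a default owner, which is a useful prompt to assign it a real one.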

Lastly, don’t let things get too big. As it happens, people love using microservices. That enthusiasm showed up in last year’s Microservices Adoption survey by O’Reilly, in which 92% of respondents reported some success with microservices and over half (54%) described their experience as “mostly successful.”

Our inherent preferences can easily lead to microservices becoming distributed monoliths. When working methods are standardized around personal preferences, teams can find their flexibility compromised. Having a firm set of requirements to qualify something as fit for purpose, along with a level of automation to prevent a service from being stretched beyond capacity, will help to deter a spate of over-eager adoption.

With feature flags, engineering teams can have complete control over growing microservices. Wrapping microservices in feature flags provides the ability to re-route your services when most needed and isolate issues if disaster strikes.
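As a rough illustration of wrapping a service call in a flag, here is a minimal sketch. The in-memory `FLAGS` dictionary stands in for a real feature flag service, and the flag key and both recommendation functions are hypothetical; no particular SDK is implied.

```python
from typing import Dict, List

# Hypothetical in-memory flag store; a real system would query a flag service.
FLAGS: Dict[str, bool] = {"use-recommendations-v2": True}


def is_enabled(flag_key: str, default: bool = False) -> bool:
    """Look up a flag, falling back to a safe default if it is unknown."""
    return FLAGS.get(flag_key, default)


def recommendations_v1(user_id: str) -> List[str]:
    """Stand-in for the established service."""
    return [f"v1-pick-for-{user_id}"]


def recommendations_v2(user_id: str) -> List[str]:
    """Stand-in for the newer service being rolled out."""
    return [f"v2-pick-for-{user_id}"]


def get_recommendations(user_id: str) -> List[str]:
    """Route the call based on the flag, so traffic can be moved without a deploy."""
    if is_enabled("use-recommendations-v2"):
        return recommendations_v2(user_id)
    return recommendations_v1(user_id)
```

Setting the flag to `False` sends every caller back to the established service, which is the isolation step you want available when the newer one misbehaves.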

Do you work in platform engineering and have your own tales of how you’ve implemented self-service abstractions, teamed up with site reliability engineers or even created a solution that bridges the gap between hardware and software infrastructure? If so, we’d love to have you join us at our third annual conference, Trajectory, which takes place Nov. 9-10.
